ChatGPT sends AI sector into frenzy, but here’s why it won’t take over our jobs… yet

Tech

Since its release about a month ago, ChatGPT has generated feverish excitement and a fundraising frenzy for the AI space.

Millions have been amazed at the potential and seemingly endless opportunities for ChatGPT, a generative AI chatbot built on GPT-3.5, OpenAI’s latest natural language processing model.

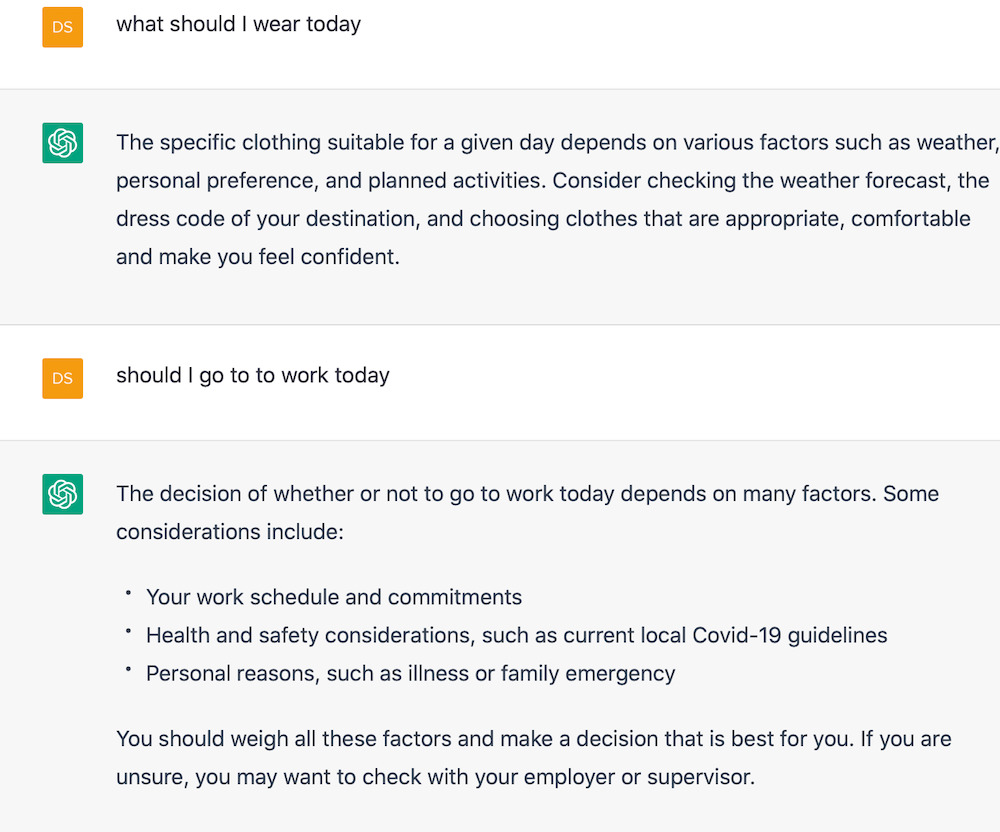

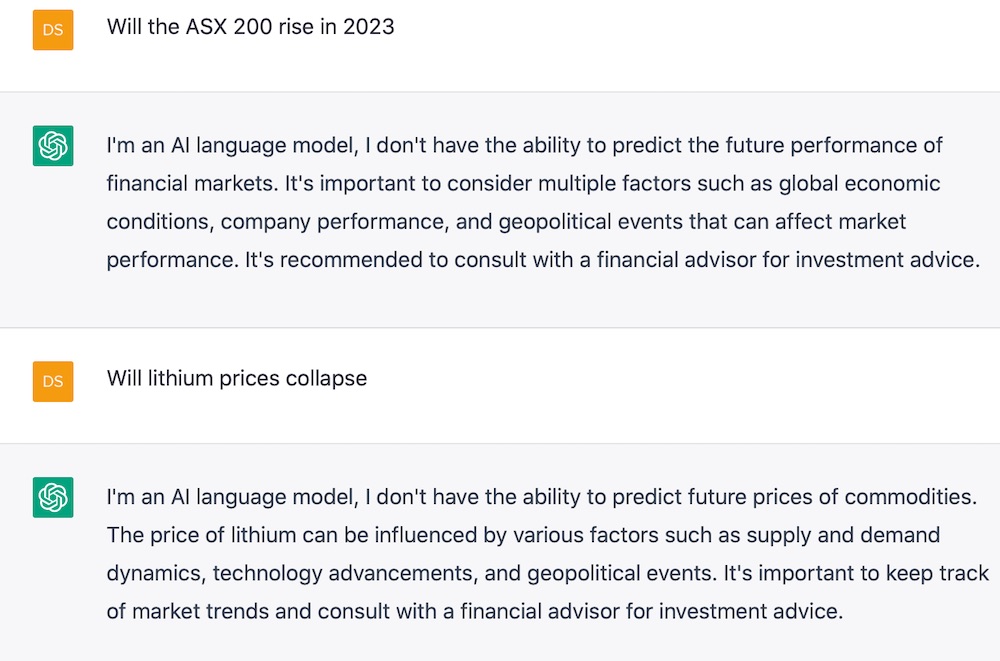

ChatGPT’s platform is indeed quite impressive, capable of generating human-like text responses to a wide range of open-ended prompts.

It can apparently perform other slick tricks too, like passing a Wharton MBA exam, answering complex Amazon job interview questions, and generating viral social media content.

But its limitations are also obvious, and on more pertinent topics ChatGPT seems to produce nothing more than fence-sitting responses.

Kai Riemer, Professor of Information Technology and Organisation at the University of Sydney, explained to Stockhead that ChatGPT was built on a ‘large language model’, or what are sometimes called neural networks.

“These are basically large statistical models that ingest vast amounts of text documents and analyse the structure of these text documents,” Riemer told Stockhead.

It memorises how human language works, and what words normally follow other words.

“If you ingest billions of documents from the internet with all kinds of text in them into these models, and you train them on supercomputers over weeks and months, what you get is what’s called a language prediction machine.”

Riemer explained that ChatGPT’s responses make it look like it’s a knowledge machine, when in reality it is just a language machine.
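To make the idea of a "language prediction machine" concrete, here is a toy sketch in Python. It is not how GPT-3.5 works internally (that model is a large neural network trained on billions of documents); it only counts which word most often follows another in a tiny made-up corpus. The function names `train_bigram_model` and `predict_next` and the sample text are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next(followers, word):
    """Return the word most often seen after `word`, or None if never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text; a real model ingests billions of documents.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(predict_next(model, "the"))      # most frequent follower of "the"
print(predict_next(model, "sat"))      # most frequent follower of "sat"
print(predict_next(model, "unicorn"))  # word never seen in training
```

The sketch predicts only from patterns in the text it has seen. It has no notion of whether a sentence is true, which is the distinction Riemer draws between a language machine and a knowledge machine.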

“When ChatGPT gets it wrong, people say ‘Oh look, ChatGPT made a mistake’.

“But it doesn’t really make a mistake in the classical sense that it forgot something or got something wrong. It just means that the texts it has learned sometimes don’t have the correct answer.

“So the machine is inherently unreliable, but in many instances, the answers are correct or they sound correct,” Riemer said.

It should therefore not be too surprising to discover that ChatGPT has passed business and law school exams, because the model was trained on billions of texts, which include business textbooks.

“ChatGPT looks like it does know things but we need to remind ourselves that it does not actually trade in knowledge.

“I’m not saying that they’re not valuable or useful, but we need to take them for what they are, and with a grain of salt,” Riemer added.

Some experts have warned that generative AI like ChatGPT could make white collar jobs obsolete, potentially disrupting many sectors.

At the moment, however, it’s difficult to predict which particular sectors would be most impacted.

“If you could predict what that change would be, it wouldn’t be disruptive. It’s the difficulty in making predictions that makes things disruptive,” Riemer said.

He believes that ChatGPT will not do away with any job or profession per se, but instead will be a tool that could enhance and change the way we work.

This tool will also be much more valuable in the hands of people who already have expert skills, rather than enabling novices to climb to the level of experts, says Riemer.

“At the end of the day what we really need is AI fluency, or the understanding of AI in both the general population and among business leaders,” Riemer said.

“It’s more than just literacy and knowing what AI does, it’s the ability to work with AI effectively.

“One of the skills of the 21st century is the ability to work with AI.”

Investment frenzy over generative AI has gripped both Silicon Valley and Wall Street since the launch of ChatGPT.

The share price of Nasdaq-listed media company BuzzFeed surged by more than 300% in the last two sessions after the company told employees it would start using OpenAI’s technology for its online quizzes and content.

Over in Silicon Valley, San Francisco-based AI startup Anthropic is being valued at US$5 billion after raising roughly US$300 million in new funding.

Back home, there are also pure-play AI stocks on the ASX.

AI translations company Straker (ASX:STG) saw revenue rocket up in the first half of the financial year as it locked in a further three years with computer giant IBM.

The company’s product can also be used on apps such as Microsoft Teams and Slack.

Icetana (ASX:ICE) is another stock in the space, with its AI software solution popular among end users in US state prisons.

Icetana’s Motion Intelligence is AI-driven video analytics surveillance software designed to automatically identify unusual or unexpected events in real time.

Appen (ASX:APX) provides data for developing AI products and machine learning, delivering AI products and services to many of the world’s largest tech companies and Fortune 500 customers globally.

And there’s BrainChip Holdings (ASX:BRN), a company involved in neuromorphic computing, a type of AI that simulates the functionality of the human neuron. The company is working on commercialising its Akida chip.

In the ETF space, Betashares’ Global Robotics and Artificial Intelligence ETF (ASX:RBTZ) could also be an option for investors wanting diversified exposure to AI.

RBTZ invests in companies involved in industrial robotics and automation, non-industrial robots, AI, and unmanned vehicles and drones.

Its top 10 portfolio holdings (as of 31 Jan) include ABB, Keyence Corp, Nvidia, Fanuc and Omron.